Now that JBoss AS 7.0.1 has been released, including messaging and MDBs, I thought we would write a quick tutorial on how to get started deploying JMS resources and MDBs.

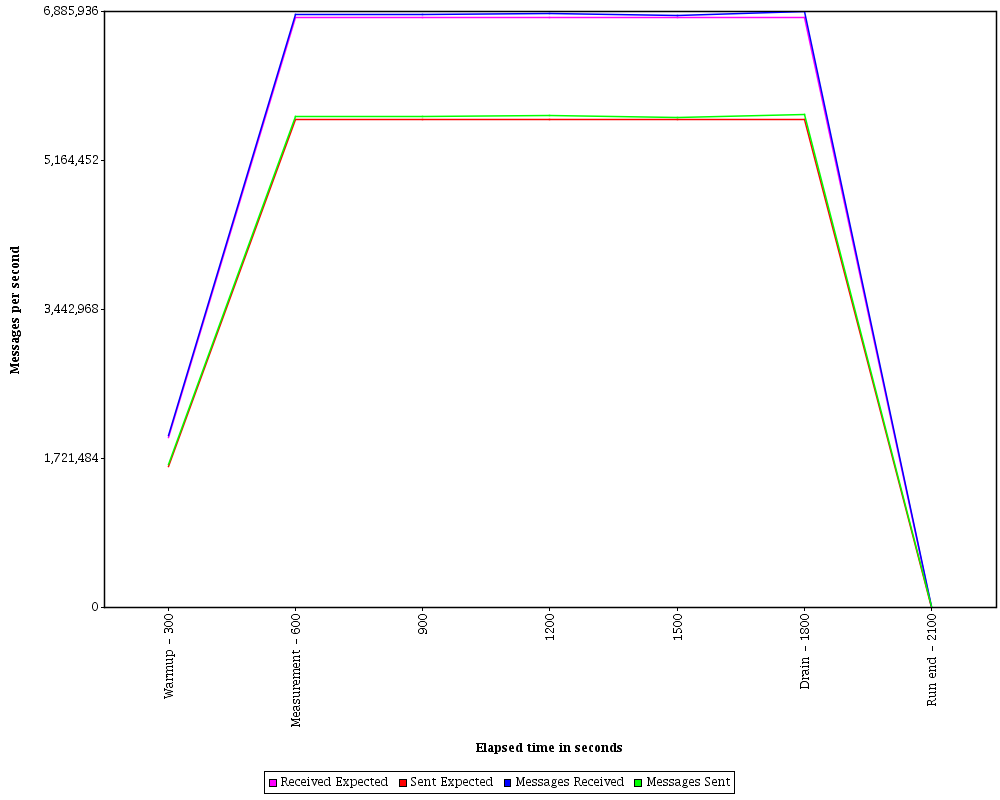

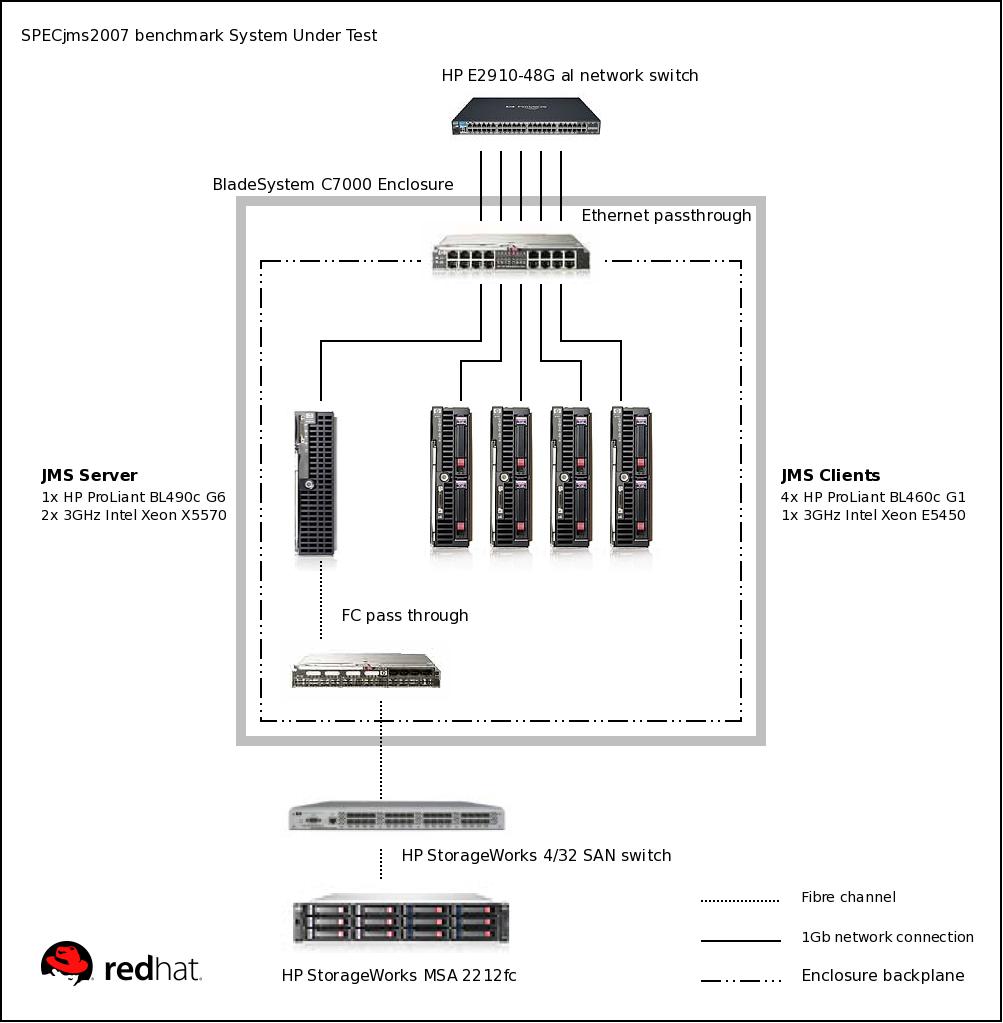

We recently blogged about our SpecJMS results with EAP 5.1.2, and of course the HornetQ version shipped with AS7 has all the same functionality and performance levels that are available in the EAP platform.

This tutorial will demonstrate how HornetQ is configured on AS7. I will explain the main concepts of configuring the HornetQ server and JMS resources, and also provide an example MDB that we can run. First of all, you will need to download AS7 from here.

Make sure you download the 'everything' version, as the web profile does not contain messaging or MDBs by default.

In AS7 there is a single configuration file, either `standalone.xml` or `domain.xml`, which is broken into subsystems. The two files are largely the same, although there are differences that are beyond the scope of this article. For more information on AS7 and its configuration, take a look at the AS7 user guide here.

By default the messaging subsystem isn't enabled; however, preview configurations that do contain a messaging subsystem are provided: `standalone-preview.xml` and `domain-preview.xml`. For this tutorial we will use `standalone-preview.xml`. To run the preview configuration, simply execute the following command from the `bin` directory:

```shell
./standalone.sh --server-config=standalone-preview.xml
```

You should see the HornetQ server start along with some JMS resources. Quick, wasn't it? Now let's take a closer look at the messaging configuration itself. Each subsystem has its own namespace, defined by a schema; the schema for the messaging subsystem can be found in `docs/schema/jboss-as-messaging_1_0.xsd` in the AS7 distribution.

If you search for `jboss:domain:messaging` in `standalone-preview.xml`, you will find the HornetQ subsystem configuration.
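If you would rather locate the subsystem programmatically, here is a small sketch using the JDK's built-in DOM parser. The class and method names are hypothetical, the default path `standalone/configuration/standalone-preview.xml` assumes you run it from the AS7 installation directory, and since the exact namespace version is an assumption, the code only looks for the `jboss:domain:messaging` part of the namespace.

```java
import java.io.File;
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

class FindMessagingSubsystem {

    // Namespace-aware parsing is required for getElementsByTagNameNS below.
    private static DocumentBuilderFactory factory() {
        DocumentBuilderFactory f = DocumentBuilderFactory.newInstance();
        f.setNamespaceAware(true);
        return f;
    }

    static Document parseFile(File file) {
        try {
            return factory().newDocumentBuilder().parse(file);
        } catch (Exception e) {
            throw new IllegalArgumentException("cannot parse " + file, e);
        }
    }

    static Document parseString(String xml) {
        try {
            return factory().newDocumentBuilder().parse(new InputSource(new StringReader(xml)));
        } catch (Exception e) {
            throw new IllegalArgumentException("cannot parse XML string", e);
        }
    }

    // Returns the namespace of the first <subsystem> element whose namespace
    // contains "jboss:domain:messaging", or null if there is no such element.
    static String findMessagingNamespace(Document doc) {
        NodeList subsystems = doc.getElementsByTagNameNS("*", "subsystem");
        for (int i = 0; i < subsystems.getLength(); i++) {
            String ns = ((Element) subsystems.item(i)).getNamespaceURI();
            if (ns != null && ns.contains("jboss:domain:messaging")) {
                return ns;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        // Assumed default path: run from the AS7 installation directory or pass a path.
        String path = args.length > 0 ? args[0] : "standalone/configuration/standalone-preview.xml";
        String ns = findMessagingNamespace(parseFile(new File(path)));
        System.out.println(ns == null ? "no messaging subsystem found" : "messaging subsystem: " + ns);
    }
}
```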

If you have used HornetQ standalone or in JBoss 6, you will be familiar with some of this configuration. The first part is basically the same as in the `hornetq-configuration.xml` file and looks like this:

```xml
<!-- Default journal file size is 10Mb, reduced here to 100k for faster first boot -->
<journal-file-size>102400</journal-file-size>
<journal-min-files>2</journal-min-files>
<journal-type>NIO</journal-type>
<!-- disable messaging persistence -->
<persistence-enabled>false</persistence-enabled>

<connectors>
    <netty-connector name="netty" binding="messaging"/>
    <netty-connector name="netty-throughput" binding="messaging-throughput">
        <param key="batch-delay" value="50"/>
    </netty-connector>
    <in-vm-connector name="in-vm" id="0"/>
</connectors>

<acceptors>
    <netty-acceptor name="netty" binding="messaging"/>
    <netty-acceptor name="netty-throughput" binding="messaging-throughput">
        <param key="batch-delay" value="50"/>
        <param key="direct-deliver" value="false"/>
    </netty-acceptor>
    <in-vm-acceptor name="in-vm" id="0"/>
</acceptors>

<security-settings>
    <security-setting match="#">
        <permission type="createNonDurableQueue" roles="guest"/>
        <permission type="deleteNonDurableQueue" roles="guest"/>
        <permission type="consume" roles="guest"/>
        <permission type="send" roles="guest"/>
    </security-setting>
</security-settings>

<address-settings>
    <!--default for catch all-->
    <address-setting match="#">
        <dead-letter-address>jms.queue.DLQ</dead-letter-address>
        <expiry-address>jms.queue.ExpiryQueue</expiry-address>
        <redelivery-delay>0</redelivery-delay>
        <max-size-bytes>10485760</max-size-bytes>
        <message-counter-history-day-limit>10</message-counter-history-day-limit>
        <address-full-policy>BLOCK</address-full-policy>
    </address-setting>
</address-settings>
```
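Both the security settings and the address settings above use `match="#"`. In HornetQ's wildcard syntax an address is a sequence of words separated by `.`; `#` matches zero or more words and `*` matches exactly one word, so `#` applies the setting to every address, including the addresses behind JMS queues such as `jms.queue.DLQ`. The following is a minimal re-implementation of those rules for illustration (the class name is hypothetical), not HornetQ's own matcher.

```java
import java.util.Arrays;
import java.util.List;

class WildcardMatch {

    // True if the address matches the pattern under HornetQ-style rules:
    // words are separated by '.', '*' matches exactly one word and
    // '#' matches zero or more words.
    static boolean matches(String pattern, String address) {
        List<String> p = Arrays.asList(pattern.split("\\."));
        List<String> a = Arrays.asList(address.split("\\."));
        return match(p, 0, a, 0);
    }

    private static boolean match(List<String> p, int pi, List<String> a, int ai) {
        if (pi == p.size()) {
            return ai == a.size();               // pattern used up: address must be too
        }
        String word = p.get(pi);
        if (word.equals("#")) {
            // '#' may absorb 0, 1, 2, ... of the remaining address words.
            for (int next = ai; next <= a.size(); next++) {
                if (match(p, pi + 1, a, next)) {
                    return true;
                }
            }
            return false;
        }
        if (ai == a.size()) {
            return false;                        // address used up but pattern is not
        }
        if (word.equals("*") || word.equals(a.get(ai))) {
            return match(p, pi + 1, a, ai + 1);  // one word consumed on each side
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(matches("#", "jms.queue.testQueue"));          // true
        System.out.println(matches("jms.queue.*", "jms.topic.testTopic")); // false
    }
}
```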

This is the basic server configuration, including the connectors and acceptors. The only difference from the standalone HornetQ configuration is that the connectors and acceptors refer to socket bindings rather than explicitly defining hosts and ports; these bindings can be found in the `socket-binding-group` part of the configuration.
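To make that indirection concrete, the sketch below resolves binding names to ports from a `socket-binding-group` fragment using the JDK's DOM parser. The fragment is embedded in the code for illustration only: the group name and the port numbers (5445 and 5455, which we assume are the defaults for `messaging` and `messaging-throughput`) are assumptions, so check the `socket-binding-group` in your own `standalone-preview.xml`. The class name is hypothetical.

```java
import java.io.StringReader;
import java.util.HashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

class SocketBindingResolver {

    // Hypothetical fragment: group name and ports are assumptions.
    static final String SAMPLE =
          "<socket-binding-group name=\"standard-sockets\">"
        + "<socket-binding name=\"messaging\" port=\"5445\"/>"
        + "<socket-binding name=\"messaging-throughput\" port=\"5455\"/>"
        + "</socket-binding-group>";

    // Maps each <socket-binding> name to its port attribute.
    static Map<String, Integer> ports(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                .newDocumentBuilder()
                .parse(new InputSource(new StringReader(xml)));
            NodeList bindings = doc.getElementsByTagName("socket-binding");
            Map<String, Integer> result = new HashMap<>();
            for (int i = 0; i < bindings.getLength(); i++) {
                Element b = (Element) bindings.item(i);
                result.put(b.getAttribute("name"), Integer.parseInt(b.getAttribute("port")));
            }
            return result;
        } catch (Exception e) {
            throw new IllegalArgumentException("cannot parse socket bindings", e);
        }
    }

    public static void main(String[] args) {
        Map<String, Integer> ports = ports(SAMPLE);
        // A connector or acceptor declared with binding="messaging" uses this port.
        System.out.println("netty -> " + ports.get("messaging"));
        System.out.println("netty-throughput -> " + ports.get("messaging-throughput"));
    }
}
```

Keeping hosts and ports in one place means you can change them for every subsystem without touching the messaging configuration itself.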

For more information on configuring the core server please refer to the HornetQ user manual.
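The catch-all address setting above limits each address to `max-size-bytes` = 10485760 bytes (10 × 1024 × 1024, i.e. 10 MiB) and sets `address-full-policy` to `BLOCK`, which means producers sending to a full address block until consumers free some space. The following in-memory model illustrates that behaviour; the class is hypothetical and simplified, since HornetQ enforces the limit inside the server.

```java
class BlockingAddress {

    private final long maxSizeBytes;   // e.g. max-size-bytes from the address-setting
    private long currentSizeBytes;     // bytes held by messages not yet consumed

    BlockingAddress(long maxSizeBytes) {
        this.maxSizeBytes = maxSizeBytes;
    }

    // True if accepting a message of this size would exceed the limit.
    synchronized boolean wouldBlock(long messageBytes) {
        return currentSizeBytes + messageBytes > maxSizeBytes;
    }

    // Non-blocking attempt: accepts the message only if it fits right now.
    synchronized boolean trySend(long messageBytes) {
        if (wouldBlock(messageBytes)) {
            return false;
        }
        currentSizeBytes += messageBytes;
        return true;
    }

    // BLOCK behaviour: the producer waits until consumers free enough space.
    // (In this sketch a message larger than maxSizeBytes would wait forever.)
    synchronized void send(long messageBytes) throws InterruptedException {
        while (wouldBlock(messageBytes)) {
            wait();
        }
        currentSizeBytes += messageBytes;
    }

    // Consuming a message frees its bytes and wakes any waiting producers.
    synchronized void consume(long messageBytes) {
        currentSizeBytes = Math.max(0, currentSizeBytes - messageBytes);
        notifyAll();
    }

    synchronized long size() {
        return currentSizeBytes;
    }

    public static void main(String[] args) throws InterruptedException {
        BlockingAddress address = new BlockingAddress(10L * 1024 * 1024); // 10485760 bytes
        address.send(8L * 1024 * 1024);
        Thread consumer = new Thread(() -> address.consume(8L * 1024 * 1024));
        consumer.start();
        // 8 MiB + 4 MiB > 10 MiB: waits if the consumer has not yet freed space.
        address.send(4L * 1024 * 1024);
        consumer.join();
        System.out.println("address now holds " + address.size() + " bytes");
    }
}
```

Running `main` prints `address now holds 4194304 bytes`: the second send cannot complete until the consumer has released the first 8 MiB.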

The rest of the subsystem configuration consists of JMS resources. First you will see the JMS connection factories, of which there are two types. The first type is the basic HornetQ connection factory:

```xml
<connection-factory name="RemoteConnectionFactory">
    <connectors>
        <connector-ref connector-name="netty"/>
    </connectors>
    <entries>
        <entry name="RemoteConnectionFactory"/>
    </entries>
</connection-factory>
```

These are normal connection factories that any external client would look up, and they are controlled by HornetQ itself. Secondly, you will see pooled connection factories, like so:

```xml
<pooled-connection-factory name="hornetq-ra">
    <transaction mode="xa"/>
    <connectors>
        <connector-ref connector-name="in-vm"/>
    </connectors>
    <entries>
        <entry name="java:/JmsXA"/>
    </entries>
</pooled-connection-factory>
```

Although these pooled connection factories connect to HornetQ, the connections themselves are under the control of the application server. If you have experience with older versions of the application server, this is the connection factory that would typically have been defined in the `jms-ds.xml` configuration file.

Pooled connection factories also define the inbound connection factory for MDBs: the name of the pooled connection factory (here `hornetq-ra`) is the resource adapter name used by the MDB. In previous JBoss application servers this was typically configured in the `ra.xml` file that defined the resource adapter.

Lastly you will see some destinations defined like so:

```xml
<jms-destinations>
    <jms-queue name="testQueue">
        <entry name="queue/test"/>
    </jms-queue>
    <jms-topic name="testTopic">
        <entry name="topic/test"/>
    </jms-topic>
</jms-destinations>
```

These are your basic JMS topics and queues, where the entry name is their location in JNDI.
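Behind each JMS destination, HornetQ uses a core address made of a `jms.queue.` or `jms.topic.` prefix plus the destination name (the `jms.queue.DLQ` dead-letter address above follows the same convention), and that address is what `address-setting` and `security-setting` match patterns are compared against. The sketch below (hypothetical class name, JDK DOM parser only) lists each destination's JNDI entries next to the core address we assume it maps to.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

class DestinationTable {

    // Builds "kind name -> JNDI entry -> core address" rows for every
    // <jms-queue> and <jms-topic> in a <jms-destinations> fragment.
    static List<String> describe(String xml) {
        Document doc;
        try {
            doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new InputSource(new StringReader(xml)));
        } catch (Exception e) {
            throw new IllegalArgumentException("not well-formed XML", e);
        }
        List<String> rows = new ArrayList<>();
        addRows(doc.getElementsByTagName("jms-queue"), "queue", rows);
        addRows(doc.getElementsByTagName("jms-topic"), "topic", rows);
        return rows;
    }

    private static void addRows(NodeList destinations, String kind, List<String> rows) {
        for (int i = 0; i < destinations.getLength(); i++) {
            Element d = (Element) destinations.item(i);
            String name = d.getAttribute("name");
            NodeList entries = d.getElementsByTagName("entry");
            for (int j = 0; j < entries.getLength(); j++) {
                String jndi = ((Element) entries.item(j)).getAttribute("name");
                // Assumed HornetQ naming: "jms.queue.<name>" or "jms.topic.<name>".
                rows.add(kind + " " + name + " -> " + jndi + " -> jms." + kind + "." + name);
            }
        }
    }

    public static void main(String[] args) {
        String xml = "<jms-destinations>"
            + "<jms-queue name=\"testQueue\"><entry name=\"queue/test\"/></jms-queue>"
            + "<jms-topic name=\"testTopic\"><entry name=\"topic/test\"/></jms-topic>"
            + "</jms-destinations>";
        describe(xml).forEach(System.out::println);
    }
}
```

For the destinations above it prints `queue testQueue -> queue/test -> jms.queue.testQueue` followed by `topic testTopic -> topic/test -> jms.topic.testTopic`.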

Now let's take a simple MDB example, build and deploy it, and configure the server for it. A sample MDB and client can be found here; it uses Maven to build. Download it and run `mvn package` to build the application EAR file.

The example uses a simple request/response pattern, so before we deploy the MDB we need to configure two queues, `mdbQueue` and `mdbReplyQueue`, like so:

```xml
<jms-queue name="mdbQueue">
    <entry name="queue/mdbQueue"/>
</jms-queue>
<jms-queue name="mdbReplyQueue">
    <entry name="queue/mdbReplyQueue"/>
</jms-queue>
```
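The request/response flow itself can be sketched without a server. In the model below, in-memory `BlockingQueue`s stand in for `mdbQueue` and `mdbReplyQueue`, one thread plays the MDB and the caller plays the client, and a correlation id ties each reply to its request. This illustrates the pattern under those assumptions and is not the sample's code; a real JMS client would typically set `JMSReplyTo` and `JMSCorrelationID` on the message instead. All names are hypothetical.

```java
import java.util.UUID;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

class RequestReplySketch {

    // Minimal stand-in for a JMS message: a correlation id plus a body.
    static final class Msg {
        final String correlationId;
        final String body;
        Msg(String correlationId, String body) {
            this.correlationId = correlationId;
            this.body = body;
        }
    }

    // In-memory stand-ins for mdbQueue and mdbReplyQueue (not HornetQ queues).
    final BlockingQueue<Msg> mdbQueue = new LinkedBlockingQueue<>();
    final BlockingQueue<Msg> mdbReplyQueue = new LinkedBlockingQueue<>();

    // Plays the MDB: take one request and reply with the same correlation id.
    void serveOne() throws InterruptedException {
        Msg request = mdbQueue.take();
        mdbReplyQueue.put(new Msg(request.correlationId, "reply to: " + request.body));
    }

    // Plays the client: send a request, then wait for the matching reply.
    String call(String body, long timeoutSeconds) throws InterruptedException {
        String id = UUID.randomUUID().toString();
        mdbQueue.put(new Msg(id, body));
        Msg reply = mdbReplyQueue.poll(timeoutSeconds, TimeUnit.SECONDS);
        if (reply == null || !reply.correlationId.equals(id)) {
            return null; // no matching reply within the timeout
        }
        return reply.body;
    }

    // Runs one request/response exchange with the "MDB" on its own thread.
    static String roundTrip(String body) {
        RequestReplySketch s = new RequestReplySketch();
        Thread mdb = new Thread(() -> {
            try {
                s.serveOne();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        mdb.start();
        try {
            String reply = s.call(body, 5);
            mdb.join();
            return reply;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("hello"));
    }
}
```

`roundTrip("hello")` returns `reply to: hello`. With a shared reply queue, the correlation id check is what stops a client from taking someone else's reply.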

Now restart (or start) the application server and copy the EAR file from `mdb/mdb-ear/target` to the `standalone/deployments` directory of the AS7 installation. You should now see the MDB being deployed. Now we can run the client: simply run `mvn -Pclient test` and the client will send a message and, hopefully, receive a message in reply.
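If you want to script the deployment step, the sketch below copies the EAR into `standalone/deployments` and polls for the marker files that we assume the AS7 deployment scanner writes next to a deployment: `<name>.deployed` on success and `<name>.failed` on failure. The class name is hypothetical, the EAR's exact file name is not given here so it is passed as an argument, and a marker left over from an earlier deployment would be reported immediately.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.HashSet;
import java.util.Set;

class DeployAndCheck {

    // Interprets the assumed scanner markers found in the deployments directory.
    static String status(Set<String> fileNames, String earName) {
        if (fileNames.contains(earName + ".failed")) return "failed";
        if (fileNames.contains(earName + ".deployed")) return "deployed";
        return "pending";
    }

    static Set<String> listNames(Path dir) throws IOException {
        Set<String> names = new HashSet<>();
        try (DirectoryStream<Path> stream = Files.newDirectoryStream(dir)) {
            for (Path p : stream) {
                names.add(p.getFileName().toString());
            }
        }
        return names;
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        if (args.length < 2) {
            System.err.println("usage: DeployAndCheck <ear under mdb/mdb-ear/target> <standalone/deployments>");
            return;
        }
        Path ear = Paths.get(args[0]);
        Path deployments = Paths.get(args[1]);
        Files.copy(ear, deployments.resolve(ear.getFileName()), StandardCopyOption.REPLACE_EXISTING);

        String name = ear.getFileName().toString();
        for (int i = 0; i < 30; i++) {              // poll for roughly 30 seconds
            String s = status(listNames(deployments), name);
            if (!s.equals("pending")) {
                System.out.println(name + ": " + s);
                return;
            }
            Thread.sleep(1000);
        }
        System.out.println(name + ": still pending, check the server console");
    }
}
```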

Congratulations, you have now configured HornetQ and deployed an MDB.